In this article we look at cloud security. With all the hype around the cloud, it’s easy to make assumptions about what it can do, but security is an area where assumptions can prove very costly, both financially and reputationally.

We will cover the cloud security model, the security features offered by cloud providers, and areas to consider when using a SaaS vendor or building your own cloud-based solution.

I’m puzzled, doesn’t the cloud handle all my security?

No, unfortunately it doesn’t, and this can be a major misunderstanding.

Cloud providers have millions of customers and cannot anticipate what they will require in terms of security, so instead they offer “Shared Responsibility Models”, which explain what they will secure, and what remains the responsibility of the customer.

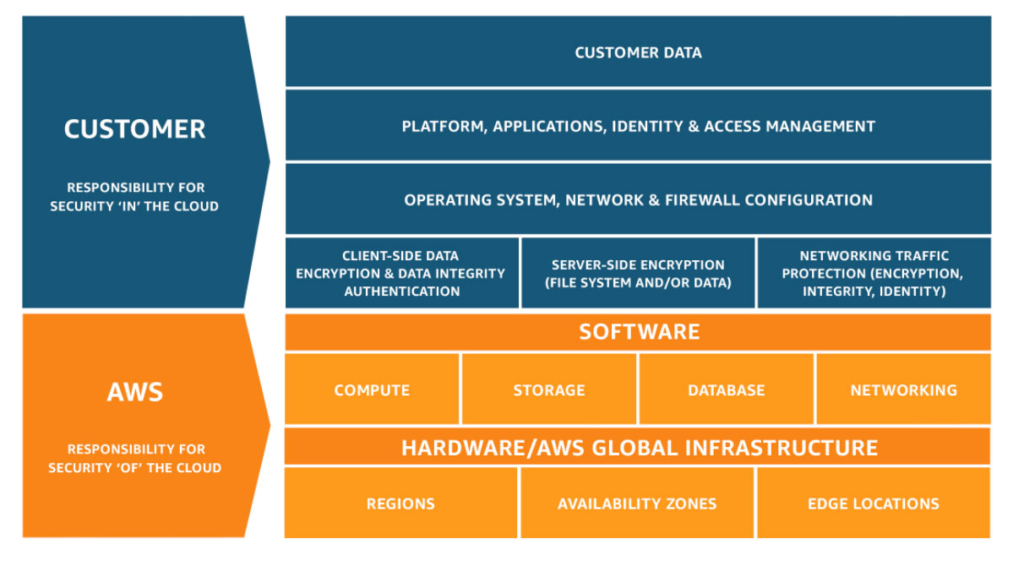

For example, here is the model from AWS:

AWS shared responsibility model

What this says, in effect, is that AWS takes responsibility for securing its datacentres and network (its infrastructure), as well as the cloud services that it runs within that infrastructure and makes available to its customers. Cloud providers do this so well that they have multiple compliance certifications (PCI, SOC, HIPAA, ISO etc.) to prove it.

But customers must still think carefully about security.

Cloud services have many configurable features, all of which can be modified by customers and many of which affect security. If a customer changes the settings of a service so that data can be accessed in unencrypted form, then any resulting breach is the customer’s responsibility rather than the cloud provider’s, as the security features were available but not used.
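To make the customer's side of the model concrete, here is a minimal sketch of a check that compares a service's settings with a secure baseline. The setting names (`encryption_at_rest`, `require_tls`, `public_access`) are hypothetical and do not belong to any particular cloud service; real services use their own names and APIs.

```python
from typing import Dict, List

# Hypothetical setting names, chosen for illustration only.
SECURE_BASELINE: Dict[str, bool] = {
    "encryption_at_rest": True,
    "require_tls": True,
    "public_access": False,
}


def find_risky_settings(settings: Dict[str, bool]) -> List[str]:
    """Return the names of settings that differ from the secure baseline.

    A setting that is missing is reported as risky rather than assumed safe.
    """
    risky = []
    for name, expected in SECURE_BASELINE.items():
        if settings.get(name) != expected:
            risky.append(name)
    return risky
```

Calling `find_risky_settings` on a service where TLS has been switched off would return `["require_tls"]`, which is exactly the kind of customer-side change the provider will not catch for you.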

How to think about securing cloud solutions

A good starting point is to think through the consequences of a data breach, hacking, or a lack of access (perhaps via a denial of service or ransomware attack). The consequences may not just be financial; reputational costs and regulatory impacts may also occur.

Clearly, impacts will be different for each system: the security risks of a SaaS travel booking vendor are far lower than those of a cloud-based, critical transaction processing system, so a risk-based approach should be used.

Having understood the level of risk, appropriate mitigations can then be identified by considering three key areas: Design, Operations, and Monitoring/Response.

1. Design – first layer of defence

Given the sophistication of today’s security attacks, solutions should now consider security as a core business requirement and follow the principles of “Security by Design”.

Designs should include multiple layers of security, each having a default position of not allowing access unless needed, and then only providing the minimal access required.
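The idea of layered, default-deny access can be sketched in a few lines. This is an illustration only: the three layers, the caller names and the action names are all hypothetical, and a real design would rely on the provider's network and identity controls rather than application code like this.

```python
from typing import Callable, Dict, Sequence

Request = Dict[str, str]
Layer = Callable[[Request], bool]


def network_layer(request: Request) -> bool:
    # Only traffic arriving over the private network is considered.
    return request.get("network") == "private"


def identity_layer(request: Request) -> bool:
    # Only known callers pass; anything unrecognised is refused.
    return request.get("caller") in {"orders-service", "billing-service"}


def permission_layer(request: Request) -> bool:
    # Each caller is given only the actions it needs, nothing more.
    minimal_access = {
        "orders-service": {"read-orders", "write-orders"},
        "billing-service": {"read-orders"},
    }
    allowed_actions = minimal_access.get(request.get("caller", ""), set())
    return request.get("action") in allowed_actions


LAYERS: Sequence[Layer] = (network_layer, identity_layer, permission_layer)


def is_allowed(request: Request, layers: Sequence[Layer] = LAYERS) -> bool:
    # Every layer must explicitly allow the request. With no layers
    # configured, refuse rather than allow by accident.
    return bool(layers) and all(layer(request) for layer in layers)
```

Note that a request is refused as soon as any one layer says no, so a mistake in one layer does not automatically open the whole solution.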

Cloud providers offer many features and tools to help design in security, such as isolated networks, encryption of data in transit and at rest, IAM (identity and access management) etc., and as cloud adoption has grown, many best practices have emerged.

To get a view on the solution’s security with your IT team or with a SaaS vendor, start by asking open-ended questions covering particular scenarios, phrasing them as ‘how would someone do…’ rather than ‘can someone do…’, to force thinking about the possibility of the scenario. Then challenge assumptions as to why the scenario isn’t thought possible and ask what controls in the design prevent it: ideally, responses should be framed in terms of best practices.

I’ve listed a few examples of these at the bottom of this blog, to illustrate how simple-to-implement design choices can have major security benefits.

2. Operational – second layer of defence

Lax operational controls could compromise a solution, regardless of how well it is designed.

For example, suppose a solution is designed to have no internet access, but operational access is via the internet and support staff have high levels of permissions. An attacker able to assume the support role may be able to open other pathways to the internet, enabling future attacks or extracting data. This new route might go undetected if internet access wasn’t being monitored (based on the expectation of no internet access in the design).

Automation of operations is a key step to reduce these types of risk.

Cloud providers include services that can automate the creation of environments (e.g. AWS CloudFormation) and solution deployment into them (e.g. AWS CodeDeploy), and these should be used, rather than making manual changes.

Automation ensures that a new environment will be built as it was designed (and tested) for each release of the solution, reducing the risk of any accidental or malicious reconfigurations, and minimising the need for human access into the environment.
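To illustrate why building from a declared design reduces the risk of reconfiguration, here is a simplified sketch of the comparison such automation relies on. It is not how CloudFormation or any specific tool works internally; the resource names and properties are made up for the example.

```python
from typing import Dict, List, Tuple

Resources = Dict[str, Dict[str, object]]


def plan_changes(desired: Resources, actual: Resources) -> List[Tuple[str, str]]:
    """Return the (action, resource name) steps needed to make the
    running environment match the declared design."""
    plan: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in actual:
            plan.append(("create", name))
        elif actual[name] != desired[name]:
            # Someone, or something, has changed this resource.
            plan.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            # Anything not in the design should not exist.
            plan.append(("delete", name))
    return plan


# Hypothetical design and running environment.
designed = {"web": {"port": 443}, "db": {"public": False}}
running = {
    "web": {"port": 443},
    "db": {"public": True},          # manually reconfigured
    "debug-proxy": {"port": 8080},   # added outside the design
}
changes = plan_changes(designed, running)
```

Here `changes` flags the reconfigured database and the unexpected proxy, both of which a manual process could easily miss.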

When operational issues do arise, human access may be needed. Another key step is to define clear processes for authorising time-limited manual access, determining the minimal privileges to be granted in these situations, and auditing activity.
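As a rough sketch of what time-limited, minimal and audited access could look like, consider the following. The `AccessGrant` class, the function names and the permission strings are all hypothetical; in practice this would be handled by the provider's identity services or a privileged access tool rather than hand-written code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class AccessGrant:
    user: str
    permissions: FrozenSet[str]
    approved_by: str
    expires_at: datetime


def grant_access(user: str, permissions: Iterable[str], approved_by: str,
                 minutes: int, now: datetime, audit: List[str]) -> AccessGrant:
    """Create a grant that expires automatically and record who approved it."""
    grant = AccessGrant(user, frozenset(permissions), approved_by,
                        now + timedelta(minutes=minutes))
    audit.append(f"{now.isoformat()} GRANT {user} by {approved_by}")
    return grant


def is_permitted(grant: AccessGrant, permission: str, now: datetime,
                 audit: List[str]) -> bool:
    """Allow only unexpired, explicitly granted permissions; log every check."""
    allowed = now < grant.expires_at and permission in grant.permissions
    outcome = "ALLOW" if allowed else "DENY"
    audit.append(f"{now.isoformat()} {outcome} {grant.user} {permission}")
    return allowed
```

The design choice worth noting is that expiry is part of the grant itself, so access lapses without anyone having to remember to revoke it.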

To get a view on the solution’s operational security, start by asking how the solution will be deployed, how it is maintained and how incidents will be handled. The objective here is to push for maximal automation and to highlight when manual activity is needed, so that the security implications can be understood.

3. Monitoring / Response – third layer of defence

Having designed a secure solution and understood access patterns, the next layer of defence is to put monitoring in place that continually checks the solution is running as expected.

Cloud providers offer audit trails, application/network logging and configuration monitoring services (e.g. AWS has CloudTrail, CloudWatch, Flow Logs and Config) to help develop sophisticated monitoring capabilities. Pub/Sub services can then be used to alert security and support staff.

Monitoring should be isolated from the underlying solution, with data stored immutably to ensure that an attacker cannot hide their tracks by changing logs.
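One related technique, shown here as a sketch, is a hash-chained log in which each entry's hash depends on the entry before it. This is tamper evidence rather than true immutability, so it complements write-once storage and does not replace it: an attacker who can rewrite the whole log could recompute the chain unless the latest hash is also kept somewhere they cannot reach. The event fields are made up for the example.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # starting value for the first entry's chain


def _digest(prev_hash: str, event: Dict[str, str]) -> str:
    payload = prev_hash + json.dumps(event, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log: List[dict], event: Dict[str, str]) -> None:
    """Add an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": dict(event), "hash": _digest(prev_hash, event)})


def verify(log: List[dict]) -> bool:
    """Recompute the chain; any edited or removed entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If an attacker edits or deletes an earlier entry, `verify` returns `False`, so the change is at least detectable.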

Monitoring can then be used to trigger automated responses to security events, with the action applied in near real-time. The set of events and their responses can be added to continuously, ensuring the environment continues to be protected as new attack vectors appear.
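A growing set of events and responses can be pictured as a simple lookup from event type to handler. The sketch below is illustrative only: the event types, the resource names and the returned action strings are hypothetical, and real handlers would call the provider's APIs instead of returning text.

```python
from typing import Callable, Dict

Event = Dict[str, str]
Handler = Callable[[Event], str]

RESPONSES: Dict[str, Handler] = {}


def respond_to(event_type: str) -> Callable[[Handler], Handler]:
    """Register a handler for an event type, so new responses
    can be added without changing the dispatch logic."""
    def register(handler: Handler) -> Handler:
        RESPONSES[event_type] = handler
        return handler
    return register


@respond_to("unexpected_internet_route")
def isolate_environment(event: Event) -> str:
    return f"isolate {event['resource']}"


@respond_to("public_storage_detected")
def make_storage_private(event: Event) -> str:
    return f"make-private {event['resource']}"


def handle(event: Event) -> str:
    handler = RESPONSES.get(event["type"])
    if handler is None:
        # No automated response yet: fall back to alerting people.
        return f"alert-staff {event['type']}"
    return handler(event)
```

Adding protection against a new attack vector then means registering one more handler, while unknown events still reach security staff.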

For example, a response to a severe security event might be to ringfence the existing solution, then replace it by building a new environment and redeploying the solution. This stops the compromise quickly with minimal impact on the solution’s users, whilst maintaining the state of the compromised environment for subsequent forensic analysis of the attack itself.

Summary

Security is a major challenge for any solution, but cloud providers offer many services that SaaS vendors and in-house teams can use to help construct a layered defence.

Threats continue to evolve, and incidents can highlight assumptions or shortcomings, so expect to have an ongoing dialogue about security with your IT team or SaaS vendor, to ensure that the solution’s risks are still being adequately mitigated.

Selected design best practices

- Isolate systems from each other both at the network level and via server firewalls, with access provided only when strictly needed.

- Prevent system access via the internet, and instead allow access only via the private dedicated networks available from each cloud provider. If access from the internet is required, then this should be provided indirectly via proxy servers.

These straightforward approaches improve security by simply removing access from unapproved sources, but can be unfamiliar to organisations running “flat” networks in which all servers can communicate with each other.
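The difference from a “flat” network can be shown with a small allowlist check using Python's standard `ipaddress` module. The address ranges, ports and tier names below are assumptions for illustration; in a real deployment these rules would live in the provider's network and firewall configuration.

```python
import ipaddress
from typing import Sequence, Tuple

# (source network, destination network, destination port)
Rule = Tuple[str, str, int]

# Hypothetical tiers: only the listed paths are open.
ALLOW_RULES: Sequence[Rule] = (
    ("10.0.1.0/24", "10.0.2.0/24", 443),   # web tier to app tier
    ("10.0.2.0/24", "10.0.3.0/24", 5432),  # app tier to database tier
)


def is_connection_allowed(src: str, dst: str, port: int,
                          rules: Sequence[Rule] = ALLOW_RULES) -> bool:
    """Allow a connection only if a rule explicitly permits it."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for src_net, dst_net, allowed_port in rules:
        if (src_ip in ipaddress.ip_network(src_net)
                and dst_ip in ipaddress.ip_network(dst_net)
                and port == allowed_port):
            return True
    return False  # default: no rule, no access
```

With these rules the web tier cannot reach the database directly, and an address outside the private ranges cannot reach anything at all.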

- Limit access to cloud services to only those components that need it

Using appropriate “IAM roles” adds another layer of security very easily. Why allow all solution components access to decrypt data, if only one component actually interacts with the data storage layer? Creating separate roles for each of the components in the solution is trivial and helps prevent unwanted access or data loss.
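One way to spot components holding more access than they need is to compare what each role has been granted with what it has actually used. The sketch below assumes you can obtain both sets (for example from policy definitions and audit trails); the component and permission names are hypothetical and are not real IAM actions.

```python
from typing import Dict, Set


def excess_permissions(granted: Dict[str, Set[str]],
                       used: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return, per component, the permissions granted but never used."""
    excess: Dict[str, Set[str]] = {}
    for component, permissions in granted.items():
        unused = permissions - used.get(component, set())
        if unused:
            excess[component] = unused
    return excess


# Hypothetical solution: only the storage component touches the data store.
granted = {
    "web": {"decrypt-data", "send-message"},
    "storage": {"decrypt-data", "read-store"},
}
used = {
    "web": {"send-message"},
    "storage": {"decrypt-data", "read-store"},
}
candidates_for_removal = excess_permissions(granted, used)
```

In this example the web component's decrypt permission shows up as a candidate for removal, which is the situation the question above is asking about.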

- Ensure all data flows between components are encrypted

Encrypting data flows to the cloud services but not those between the solution’s components achieves little, so ensure all data flows are encrypted. If the solution uses containerisation, then this can be introduced quickly and transparently using service mesh software such as Istio.

- Encrypt all data at rest, using keys you control

Using your own encryption keys gives you more fine-grained control over the encryption process, and also allows you to walk away from a cloud (or a SaaS product) without having to worry about data deletion: once the key is destroyed, the data cannot be decrypted and so is effectively destroyed.

- Regularly recycle the solution’s infrastructure

There is always the risk that an attacker succeeds in accessing a server. However, by using load balancers and auto scaling of servers for each of the solution’s components, servers can be regularly deleted and recreated, limiting the time the attacker has for malicious activity before the server is destroyed. Auto scaling ensures that a replacement is quickly started, so users of the solution won’t usually notice any change in availability or latency.
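The selection step of such a recycling policy is simple enough to sketch. The 24-hour maximum age and the server names are assumptions for the example; the actual termination and replacement would be left to the provider's auto scaling service.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def servers_to_recycle(launch_times: Dict[str, datetime],
                       max_age: timedelta, now: datetime) -> List[str]:
    """Return the servers that have been running for max_age or longer."""
    return sorted(name for name, launched in launch_times.items()
                  if now - launched >= max_age)


# Hypothetical fleet with one long-running server.
now = datetime(2024, 1, 2, tzinfo=timezone.utc)
fleet = {
    "web-1": now - timedelta(hours=30),
    "web-2": now - timedelta(hours=2),
}
due = servers_to_recycle(fleet, timedelta(hours=24), now)
```

Terminating the servers in `due` one at a time lets auto scaling start replacements before the next one is removed, which is what keeps the change invisible to users.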